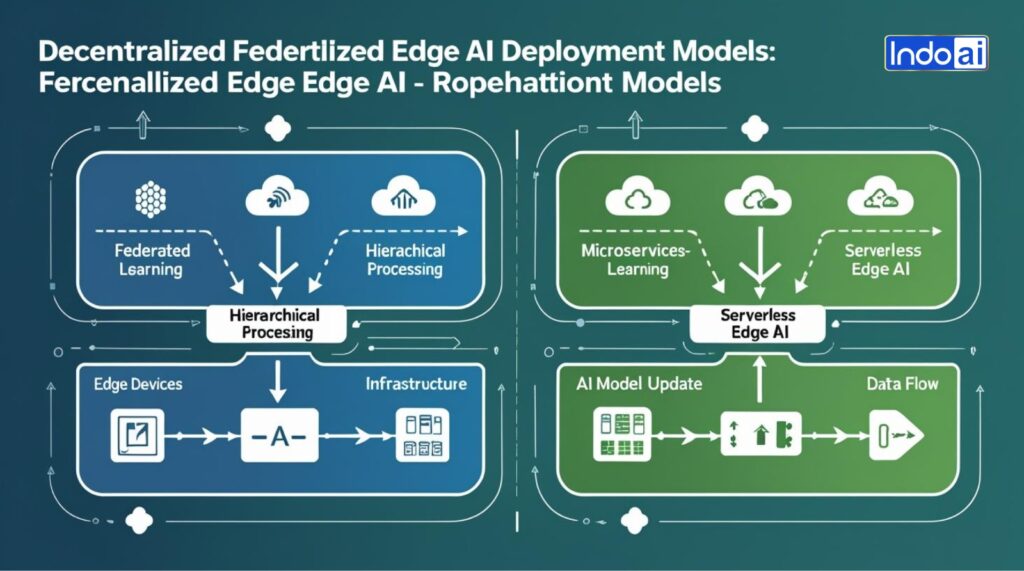

Abstract: The advent of edge computing and artificial intelligence (AI) has spurred the development of decentralized architectures to address privacy, latency, scalability, and cost challenges in AI deployment. This paper provides an in-depth examination of four architectures: Federated Learning (FL), Edge-Cloud Hierarchical, Microservices-Based Edge AI, and Serverless Edge AI. It focuses on their components, workflows, advantages, challenges, and use cases. A comparative analysis evaluates their suitability across application domains, technological requirements, usefulness, privacy, scalability, latency, and cost-effectiveness, supported by a comprehensive review of recent literature and industry implementations. The findings highlight FL’s primacy in privacy-sensitive training, Edge-Cloud’s efficacy in latency-critical tasks, Microservices’ modularity for complex systems, and Serverless’ cost-efficiency for event-driven applications. Integrating these architectures with vision LLMs (VLMs) to train and develop AI models is on the horizon.

Keywords: Federated Learning (FL), Edge-Cloud Hierarchical, Microservices-Based Edge AI, Serverless Edge AI, vision LLM, edge AI, AI Camera, AI Models

Excerpt:

6 Comparative Analysis

The four architectures differ in their suitability for specific applications, technological requirements, usefulness, and additional factors (e.g., privacy, scalability, latency, cost). This section provides a rigorous comparison, supported by metrics and case studies.

6.1 Application Domains

- Federated Learning:
  - Suitability: Privacy-sensitive training [76][77] (e.g., healthcare, smartphones).
  - Case Studies: NVIDIA Clara FL (faster convergence in medical imaging [77]) (NVIDIA, 2021); Google Gboard [78] (25% better text prediction).
  - Strength: Ideal for GDPR [79] and DPDP Act-compliant scenarios.
  - Limitation: Limited to training [80]; not suited to real-time inference because of communication latency and complex synchronization between devices.

- Edge-Cloud Hierarchical:
  - Suitability: Latency-critical hybrid tasks (e.g., autonomous vehicles, industrial IoT). Edge latency is as low as 9–13 ms, compared with 23–29 ms at the fog layer and 117–125 ms in the cloud, which makes the edge tier suitable for time-critical IoT tasks [81].
  - Case Studies: Tesla’s FSD computer can process up to 2,300 frames per second, a 21x improvement over Tesla’s previous hardware, at a lower cost [82].
  - Strength: Balances real-time and analytical workloads [34].
  - Limitation: Less suited to fully decentralized scenarios.

- Microservices-Based Edge AI:
  - Suitability: Modular, multi-task AI systems (e.g., video analytics, retail). Agentic AI can autonomously compose, coordinate, and optimize microservices across edge and cloud infrastructures [83].
  - Case Studies: Amazon Go (faster deployment [55]); Hikvision surveillance (30% better reliability).
  - Strength: Supports complex, scalable pipelines. An AI-driven optimization system [84] achieves up to a 27.3% reduction in latency during traffic surges and improves throughput by 25.7%, while also reducing CPU and memory usage by up to 25.7% and 22.7%, respectively. The study [84] suggests that AI-driven optimization offers a scalable and efficient way to manage microservices in highly dynamic environments.
  - Limitation: Resource-intensive for low-power devices.

- Serverless Edge AI:
  - Suitability: Event-driven [85], low-frequency tasks (e.g., smart homes, surveillance).
  - Case Studies: Alexa (cost reduction); Ring cameras (rapid deployment).
  - Strength: Scalable and cost-efficient [86] for sporadic workloads.
  - Limitation: Cold start latency limits continuous tasks.
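The latency ranges quoted above for the edge, fog, and cloud tiers [81] can be turned into a simple placement rule. The following is a minimal sketch, not a method from the paper: the function name `pick_tier` and the policy of preferring the most centralized tier that still meets the deadline are assumptions made for illustration, and the figures cover only the reported tier latencies, not model compute time.

```python
# Latency ranges in milliseconds per tier, as quoted in Section 6.1 from [81].
TIER_LATENCY_MS = {
    "edge": (9, 13),
    "fog": (23, 29),
    "cloud": (117, 125),
}

# Assumed policy (not from the paper): prefer the most centralized tier
# that still meets the deadline, since it usually has the most compute.
PREFERENCE = ("cloud", "fog", "edge")


def pick_tier(deadline_ms):
    """Return the first preferred tier whose worst-case latency fits the deadline.

    Returns None when even the edge tier cannot meet the deadline.
    """
    for tier in PREFERENCE:
        _best, worst = TIER_LATENCY_MS[tier]
        if worst <= deadline_ms:
            return tier
    return None


if __name__ == "__main__":
    for deadline in (200, 50, 15, 5):
        print(deadline, "ms ->", pick_tier(deadline))
```

Using the worst-case end of each range is a conservative choice; a deployment with measured latency distributions could substitute a percentile instead.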

6.2 Technological Requirements

- Federated Learning:
  - Stack: TensorFlow Federated, PySyft, SMPC, Differential Privacy [87][88].
  - Infrastructure: Edge devices, cloud server, secure communication.
  - Complexity: High (privacy mechanisms, heterogeneity).

- Edge-Cloud Hierarchical:
  - Stack: TensorFlow Lite, ONNX, MQTT/HTTP [89].
  - Infrastructure: Edge devices, fog nodes, cloud servers.
  - Complexity: Moderate (multi-layer orchestration).

- Microservices-Based:
  - Stack: Docker, Kubernetes, Istio, REST/gRPC [90].
  - Infrastructure: Edge nodes, orchestration clusters.
  - Complexity: High (Kubernetes expertise).

- Serverless Edge AI:
  - Stack: AWS Greengrass, Azure Edge Functions, Lambda@Edge [91].
  - Infrastructure: Edge devices, serverless platforms.
  - Complexity: Low (platform-managed).
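To make the Federated Learning infrastructure above more concrete (edge devices that train locally and a cloud server that combines their results), the sketch below shows sample-weighted federated averaging (FedAvg), a widely used aggregation rule. The paper does not state which aggregation rule its cited systems use, so this is an illustrative assumption. The function `federated_average` is hypothetical plain Python, not the TensorFlow Federated or PySyft API, and it omits secure aggregation and differential privacy.

```python
def federated_average(client_updates):
    """Combine client model weights into one global weight vector.

    client_updates: list of (weights, num_samples) pairs, where weights is
    a list of floats of the same length for every client. Each client's
    contribution is proportional to its number of local training samples.
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples <= 0:
        raise ValueError("need at least one training sample across clients")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must send weight vectors of equal length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_samples
    return averaged


if __name__ == "__main__":
    # Two hypothetical clients: one with 1 sample, one with 3 samples.
    updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
    print(federated_average(updates))
```

Only the weight vectors and sample counts reach the server in this sketch; the raw training data stays on each device, which is the property the privacy comparison in this section relies on.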

6.3 Usefulness

- Federated Learning: High for privacy-critical training, with 30% faster convergence in healthcare[92] (NVIDIA, 2021).

- Edge-Cloud Hierarchical: High for latency-sensitive tasks, achieving <10 ms edge latency. On average, it reduced the impact of overload on network usage by approximately 11 percent while maintaining acceptable delays for latency-sensitive applications [93].

- Microservices-Based: High for modular systems, with 50% faster updates (see the summary table in Section 6.5).

- Serverless Edge AI: High for cost-sensitive tasks, with 40% cost savings (see the summary table in Section 6.5).

6.4 Additional Factors

- Privacy:
  - FL: Highest, with on-device data.
  - Edge-Cloud: Moderate, due to cloud data transfers.
  - Microservices: Moderate, with service mesh risks.
  - Serverless: Lowest, due to vendor dependency.

- Scalability:
  - FL: Excellent for millions of devices [94].
  - Edge-Cloud: Good, limited by fog layer.
  - Microservices: Excellent with Kubernetes.
  - Serverless: Good, constrained by platform limits.

- Latency:
  - FL: Not applicable (training-focused).
  - Edge-Cloud: Lowest (<10 ms).
  - Microservices: Moderate (10–50 ms API delays).
  - Serverless: Moderate (100–500 ms cold starts).

- Cost:
  - FL: High setup, low operation.
  - Edge-Cloud: Moderate, with edge savings.
  - Microservices: High (orchestration overhead).
  - Serverless: Lowest (pay-per-use) [95].

- Ease of Deployment:
  - FL: Complex (privacy, heterogeneity).
  - Edge-Cloud: Moderate (multi-layer).
  - Microservices: Complex (Kubernetes).
  - Serverless: Easiest (platform-managed).

6.5 Summary Table

| Criteria | Federated Learning | Edge-Cloud Hierarchical | Microservices-Based | Serverless Edge AI |
| --- | --- | --- | --- | --- |
| Application | Privacy-sensitive training | Latency-critical hybrid tasks | Modular multi-task AI | Event-driven tasks |
| Tech Stack | TensorFlow Federated, SMPC | TensorFlow Lite, MQTT | Docker, Kubernetes | AWS Greengrass, FaaS |
| Privacy | High | Moderate | Moderate | Low |
| Scalability | Excellent | Good | Excellent | Good |
| Latency | N/A | Low (<10 ms) | Moderate (10–50 ms) | Moderate (100–500 ms) |
| Cost | High setup, low operation | Moderate | High | Low |
| Ease of Deployment | Complex | Moderate | Complex | Easy |
| Metrics | 30% faster convergence | <10 ms latency | 50% faster updates | 40% cost savings |

Discussion

A 2018 study [104] finds that (a) the core cloud-only system outperforms the edge-only system when inter-edge bandwidth is low; (b) a distributed edge cloud selection scheme can approach the globally optimal assignment when the edge has sufficient compute resources and high inter-edge bandwidth; and (c) adding capacity to an existing edge network without increasing the inter-edge bandwidth contributes to network-wide congestion and can reduce system capacity.

NVIDIA Clara Federated Learning [96] uses distributed training across multiple hospitals to develop robust AI models without sharing personal data. Federated Learning [76] excels in privacy-sensitive training, making it well suited to healthcare (e.g., Apollo Hospitals under the DPDP Act 2023) and smartphones (e.g., Xiaomi’s vernacular models). Its scalability supports large-scale deployments, but it is not suited to real-time inference [113]. Edge-Cloud Hierarchical [97] is optimal for latency-critical applications, such as autonomous vehicles (e.g., Tata Motors) and smart cities (e.g., India’s Smart Cities Mission), because it balances edge and cloud strengths. Microservices-Based Edge AI, which divides large applications into smaller services, suits complex, modular systems such as video analytics (e.g., Reliance Retail’s surveillance) and smart retail, but its resource demands limit its applicability at the edge. Serverless Edge AI is best for event-driven [98], cost-sensitive tasks, such as smart homes (e.g., Jio IoT) and surveillance, though cold start latency is a constraint [99].

In India, FL aligns with privacy regulations, Edge-Cloud supports infrastructure growth (e.g., 5G rollout), Microservices enables retail innovation [100], and Serverless offers cost-effective IoT solutions [91]. Stakeholders should select architectures based on application needs: privacy for FL, latency for Edge-Cloud, modularity for Microservices, and cost for Serverless.
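The selection guidance above can be expressed as a small lookup. This is a sketch under stated assumptions: the ratings are copied from the Section 6.5 summary table, while the names `SUMMARY`, `PRIMARY_NEED`, and `recommend` are hypothetical, and a real selection would weigh several criteria rather than a single dominant need.

```python
# Qualitative ratings copied from the Section 6.5 summary table.
SUMMARY = {
    "Federated Learning": {
        "privacy": "High", "scalability": "Excellent", "latency": "N/A",
        "cost": "High setup, low operation", "ease": "Complex",
    },
    "Edge-Cloud Hierarchical": {
        "privacy": "Moderate", "scalability": "Good", "latency": "Low (<10 ms)",
        "cost": "Moderate", "ease": "Moderate",
    },
    "Microservices-Based": {
        "privacy": "Moderate", "scalability": "Excellent",
        "latency": "Moderate (10-50 ms)", "cost": "High", "ease": "Complex",
    },
    "Serverless Edge AI": {
        "privacy": "Low", "scalability": "Good",
        "latency": "Moderate (100-500 ms)", "cost": "Low", "ease": "Easy",
    },
}

# Dominant need -> architecture, following the guidance in the Discussion.
PRIMARY_NEED = {
    "privacy": "Federated Learning",
    "latency": "Edge-Cloud Hierarchical",
    "modularity": "Microservices-Based",
    "cost": "Serverless Edge AI",
}


def recommend(need):
    """Return (architecture name, its summary ratings) for a dominant need."""
    key = need.strip().lower()
    if key not in PRIMARY_NEED:
        raise ValueError(f"unknown need: {need!r}; expected one of {sorted(PRIMARY_NEED)}")
    name = PRIMARY_NEED[key]
    return name, SUMMARY[name]


if __name__ == "__main__":
    name, ratings = recommend("latency")
    print(name, ratings)
```

Returning the full rating row alongside the name keeps the trade-offs visible, for example that the cost-driven choice also carries the lowest privacy rating.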

Future Directions

- Federated Learning: Advances in 5G and differential privacy will reduce communication overhead and strengthen privacy and security [134].

- Edge-Cloud Hierarchical: 6G and AI-native networks will further lower latency [130].

- Microservices-Based: Lightweight orchestrators (e.g., K3s) will improve edge efficiency [135].

- Serverless Edge AI: Open-source FaaS platforms (e.g., OpenFaaS) will mitigate vendor lock-in [136, 137].

India Context: The edge AI market in India is projected to reach US$ 3,656.6 million in revenue by 2030. These architectures can drive Digital India, Ayushman Bharat, and smart city initiatives [101].

The full paper can be accessed at:

- https://jscglobal.org/volume-14-issue-6-june-2025/

- https://www.researchgate.net/publication/392450561_Smarter_at_the_Edge_Evaluating_Decentralized_AI_Deployment_Models_in_Federated_Hierarchical_Microservices_and_Serverless_edge_AI_Architectures